AI Broader Impact Statements

Analysis

➡️ Try out analyzing this data with LLooM on this Colab notebook.

Task: Investigate anticipated consequences of AI research

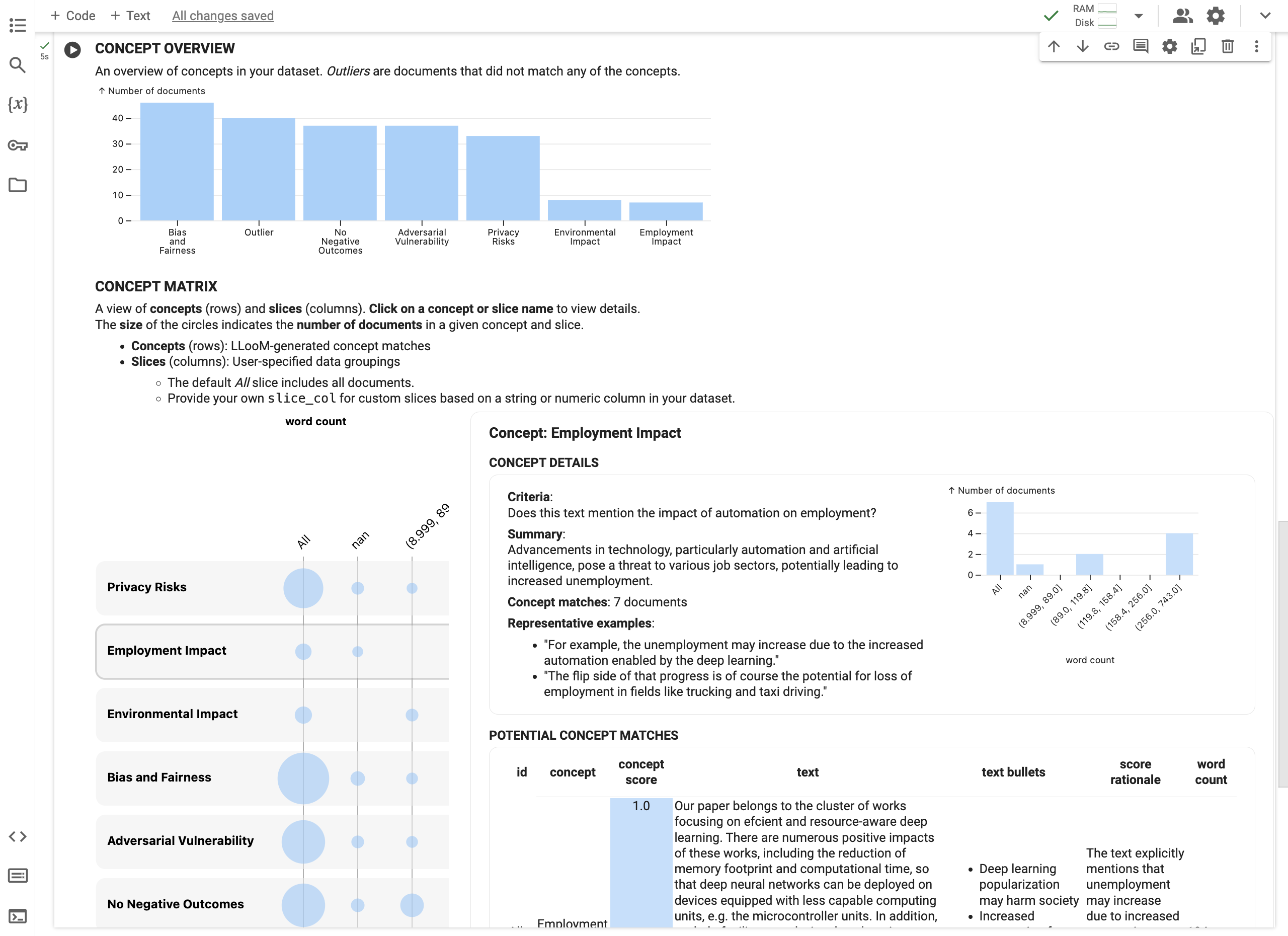

Advances in AI are driven by research labs, so to avoid future harms, today's researchers must be equipped to grapple with AI ethics, including the ability to anticipate risks and mitigate potentially harmful downstream impacts of their work. How do AI researchers assess the consequences of their work? LLooM can help us to understand how AI researchers discuss downstream outcomes, ethical issues, and potential mitigations. Such an analysis could help us to uncover gaps in understanding that could be addressed with guidelines and AI ethics curricula.

Dataset: NeurIPS Broader Impact Statements, 2020

In 2020, NeurIPS, a premier machine learning research conference, required authors to include a broader impact statement in their submission in an effort to encourage researchers to consider negative consequences of their work. These statements provide a window into the ethical thought processes of a broad swath of AI researchers, and prior work from Nanayakkara et al. has performed a qualitative thematic analysis on a sample of 300 statements.